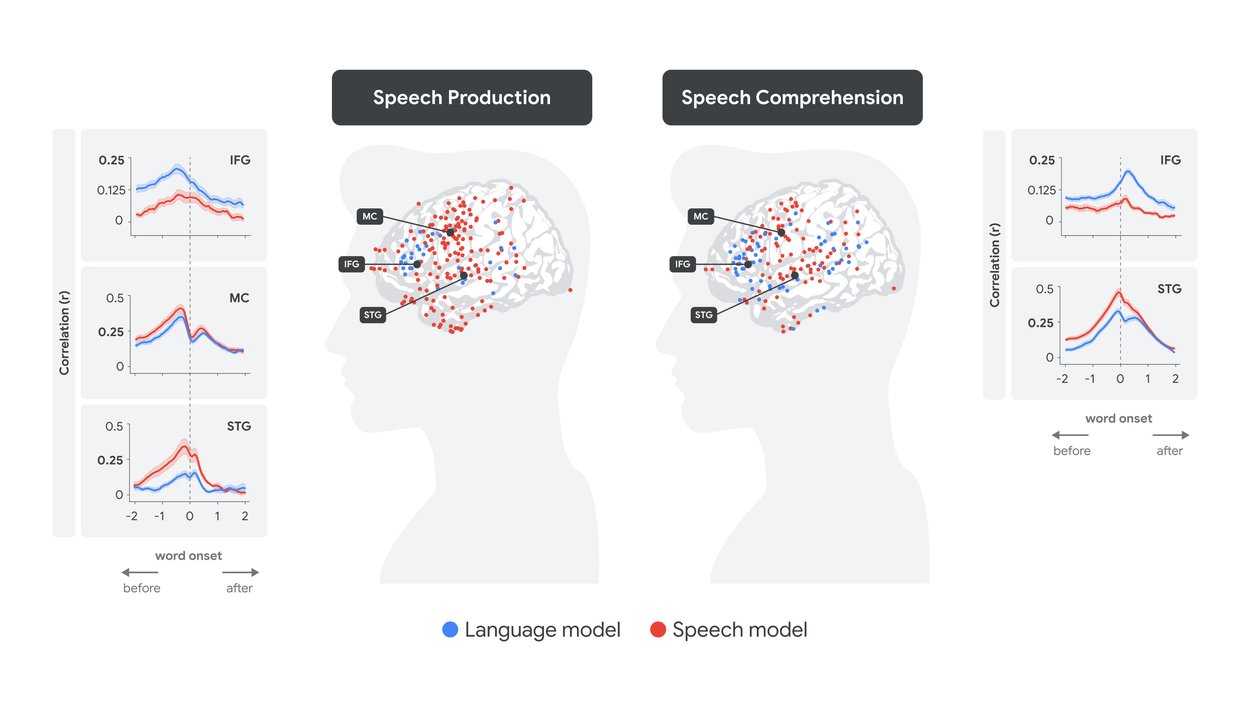

Today I came across the article “Deciphering language processing in the human brain through LLM representations” on the Google Research blog. It claims that the neural activity in the brain during speech comprehension and production is similar to the internal activations of Whisper, OpenAI’s speech recognition model.

Representing language on a computer, or even better, deciphering how exactly it is represented in the brain, has been the holy grail of linguistics for decades. While the article highlights many differences as well as similarities, it is nevertheless very interesting research.

I also noticed that they claim “linear” similarity, but the correlation values are all under 0.25, i.e. low. So something similar is happening, but no more than that.
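To put a number on “low”, here is a small back-of-the-envelope sketch. It assumes the reported values are ordinary Pearson correlations (my reading, not something I verified against the paper), and the function names and simulated data are made up purely for illustration. For a linear relationship, the share of variance explained is the square of the correlation, so 0.25 corresponds to roughly 6%.

```python
import math
import random


def variance_explained(r: float) -> float:
    """Share of variance a linear fit explains, given Pearson correlation r."""
    return r * r


def pearson(xs, ys):
    """Plain Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def simulate(r: float, n: int = 20000, seed: int = 0):
    """Draw synthetic (x, y) pairs whose true correlation is r (illustration only)."""
    rng = random.Random(seed)
    xs = [rng.gauss(0, 1) for _ in range(n)]
    # y is mostly independent noise, with a small linear contribution from x.
    ys = [r * x + math.sqrt(1 - r * r) * rng.gauss(0, 1) for x in xs]
    return xs, ys


if __name__ == "__main__":
    r = 0.25  # upper bound of the correlations as I read them in the article
    print(f"variance explained at r = {r}: {variance_explained(r):.4f}")
    xs, ys = simulate(r)
    print(f"sample correlation of simulated data: {pearson(xs, ys):.3f}")
```

In other words, even at the top of that range, a linear mapping would leave well over 90% of the variance unaccounted for, which is what I mean by “something similar, but no more than that”.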

As someone with a linguistics education who studied languages for many years, I find it fascinating how much the machinery has improved in recent years. It’s still nowhere near perfect, in my opinion, and bridging the last 5% may well take many more years. Real-world language is just too messy and nuanced, and that’s what makes speech and text fun.